3 Comments

Anyone evil enough to use AI for this purpose isn’t going to care about, or be deterred by, regulation anyway.

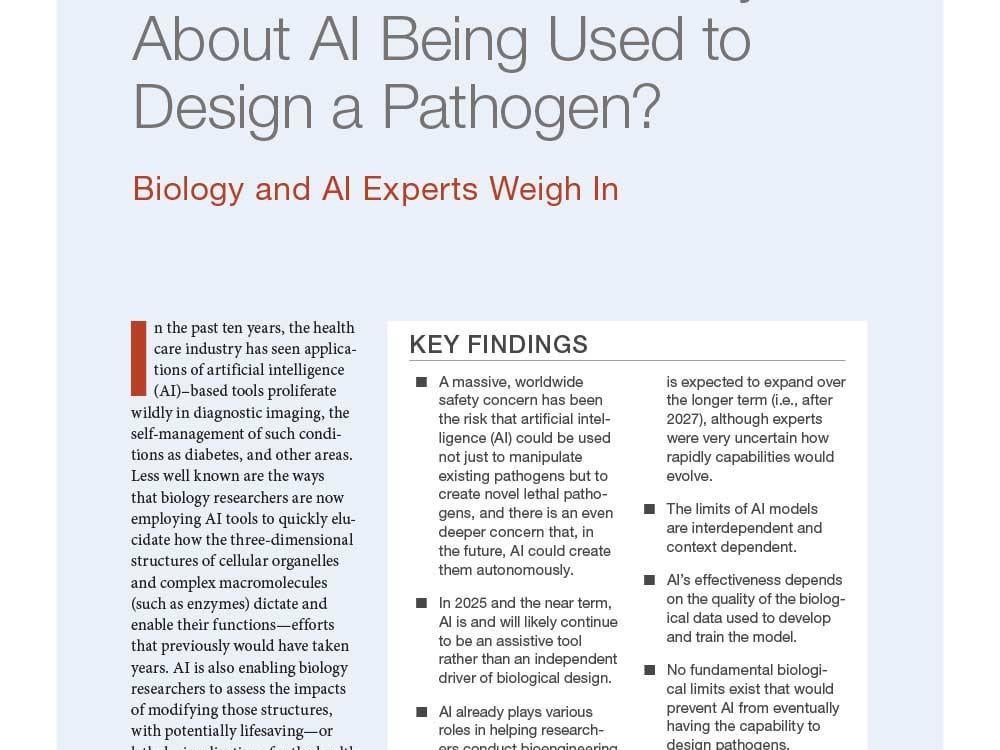

This RAND Corporation report highlights a critical and often overlooked X-risk: the democratization of biological weapon design through Large Language Models. While much of the current AI safety debate focuses on job loss or generalized “alignment,” this research shows that AI is already capable of closing the technical knowledge gap for bad actors to engineer airborne pathogens.

Looking ahead, this raises massive questions about how we can maintain an “open” AI ecosystem while preventing the dissemination of lethal biological blueprints. If an AI can act as a virtual lab assistant for a pathogen architect, our current regulatory frameworks for both biotech and AI are effectively obsolete. We need to discuss whether the future of AI safety requires “biological guardrails” that are just as robust as the digital ones we are currently failing to build. How do we balance scientific progress with the very real risk of an AI-assisted pandemic?

The very fact that this is already out there implies you’ll need massive surveillance of water supplies, crops, and airborne pathogens. It’s another reason to close the border as well until we set up public safety measures.